Don't believe everything you see on the internet.

I had a periodic reminder of this phrase last week.

With algorithms pushing out hot content in front of millions, misinformation on the internet travels faster than ever before.

Backed by a couple of fake screenshots and riding the current trends, a well-crafted fake tweet could gain millions of views in a day, tarnishing the judgment of a significant number of people.

I've fallen for this trap enough times that I'm now more sceptical of any hot topic or influencer advice I see on the internet.

In this blog post, I'll talk about why you should take content on social media and generally on the internet with a pinch of salt and how you can leverage a simple guiding principle to avoid being misinformed.

Let's start with:

Google’s recent generative AI controversy

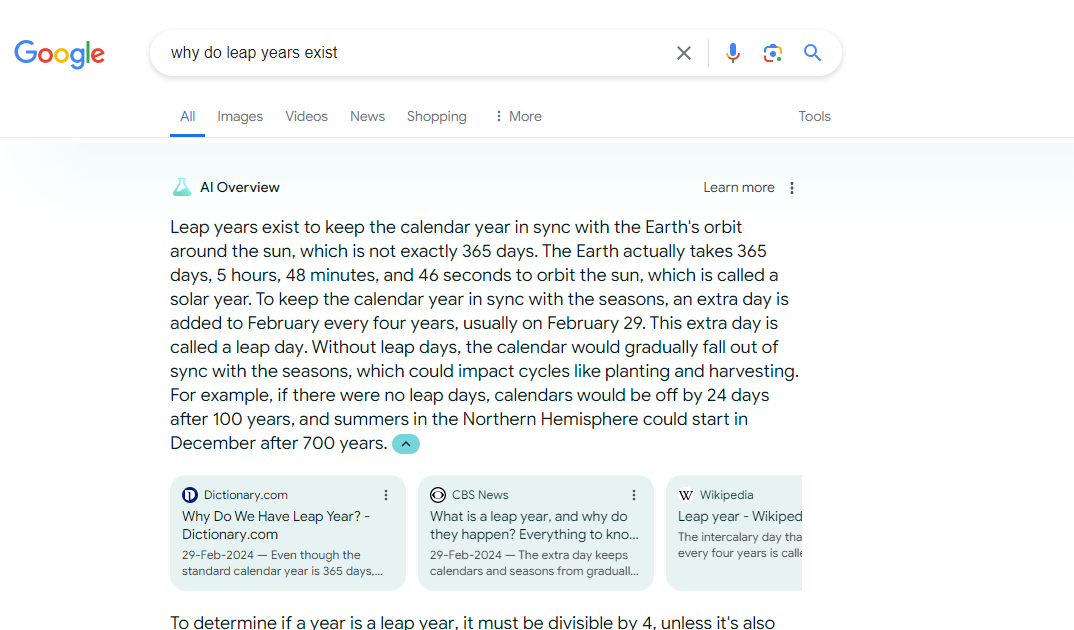

Google introduced a new generative AI feature called AI Overview that sums up the results of a regular Google search and gives a concise answer at the top of the page, much like Perplexity or Arc Search would do.

All links from which AI Overview has derived its answer are cited below the generated answer for you to refer to.

Fundamentally, this could be a fantastic extension to Google Search's existing featured snippets and help get succinct summarised answers from more than a single source to simple queries like “Can I heat avocado toast?”

But, here's a problem that some of the early public testers of this feature encountered:

Google's new AI Overviews returned useless and borderline dangerous answers to some obscure questions like “How many rocks should I eat?”:

I couldn’t believe it before I tried it. Google needs to fix this asap.. pic.twitter.com/r3FyOfxiTK

— Kris Kashtanova (@icreatelife) May 23, 2024

While this was a hilariously wrong answer, I couldn't help but notice the attribution to geologists at UC Berkeley. This is enough to make anyone go “Wait, what?” no matter how wild the statement might be.

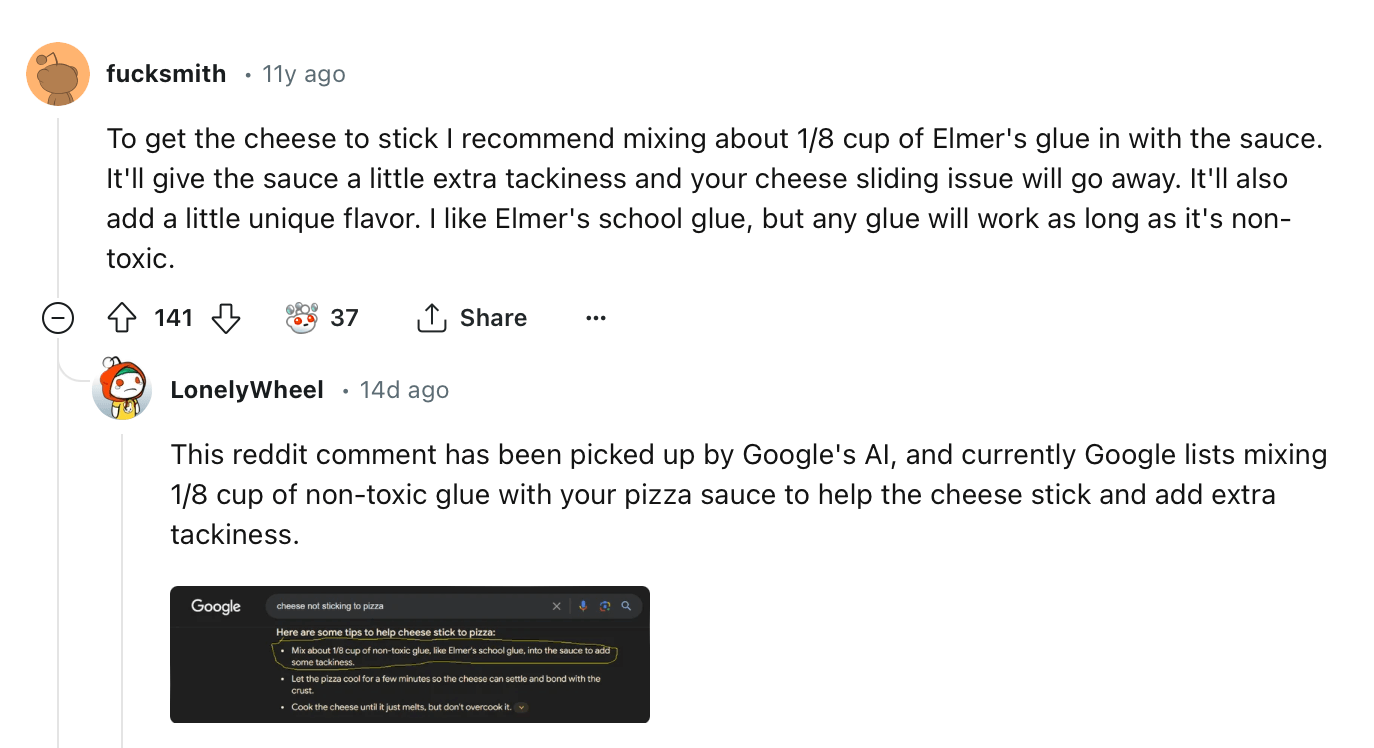

In another example, AI Overview suggested adding non-toxic glue to pizza sauce to make the cheese on top stick to the base and not slide off:

Google AI overview suggests adding glue to get cheese to stick to pizza, and it turns out the source is an 11 year old Reddit comment from user F*cksmith 😂 pic.twitter.com/uDPAbsAKeO

— Peter Yang (@petergyang) May 23, 2024

But, why?

Large language models or LLMs like Google's are exceptional at combing through hundreds of terabytes of data and predicting the next word in sequence.

This helps them dig into a vast knowledge base of existing data and assemble answers to a user query from bits of relevant information they were trained on and sometimes from the live internet, too.

While this process gives factually correct and helpful answers most of the time, these models can blatantly spit out incorrect or even wild answers without hesitation for many reasons including bad information sources.
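To make the "bad information sources" point concrete, here is a toy sketch of next-word prediction. It is nowhere near how Google's models actually work: it only counts which word follows which in a tiny, made-up set of sentences (the `corpus` below is my own hypothetical example, not real training data). It does show the basic failure mode, though: if a joke appears in the sources more often than the sensible answer, the joke wins.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text: one sensible source and one joke repeated twice.
corpus = [
    "cheese sticks with heat",
    "cheese sticks with glue",
    "cheese sticks with glue",
]

model = train_bigrams(corpus)
print(predict_next(model, "with"))  # prints "glue"
```

The predictor has no notion of sarcasm or truth. It repeats whatever its sources said most often, which is roughly why a single widely copied joke can surface as a confident answer.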

In the example where AI Overview suggested adding glue to pizza sauce, it mistook a sarcastic Reddit comment for a genuine answer to the question.

In the other example, where Google's AI Overview said it was okay to eat rocks every day, the answer was backed by a satirical blog post which was derived from an article from The Onion.

The Onion's posts are an attempt at humour rather than being factually correct blog posts. The whole website is made for entertainment, not education.

Unfortunately, the AI models might or might not know that. Google search got confused because its initial source, ResFrac, seemed genuine, and therefore, it cited factually incorrect and dangerous advice as a real statement.

This is not only Google search's problem, though.

Hallucinations and inaccurate answers have been a long-standing problem with all generative AI tools like ChatGPT and Claude.

In my own use, both ChatGPT and Claude have often blurted out seemingly genuine but completely nonsensical answers.

Anyway, Google's AI Overviews definitely failed in the instances we discussed above and are a model case for not trusting everything on the internet, but how people on social media reacted was far more reckless.

Social media algorithms today reward people for crafting engagement bait on their platforms.

If your tweet or reel gathers a decent amount of eyeballs after you post, algorithms will carry your content to thousands or even millions of people.

This incentive, though fundamentally fine, often leads people to make claims or post content that is factually incorrect but sparks debate and attracts engagement.

Google's AI Overview launch met the same fate.

People started doctoring the actual answers from their searches into funny and sometimes recklessly dangerous responses, and then posting them on their social media accounts.

As these posts spread like wildfire, more people tried to cash in on the engagement by reposting the popular posts or by coming up with their own ridiculous answers:

People were not only slinging mud at Google, but they were, I hope unintentionally, spreading dangerous advice over the internet.

An answer like the one above, when pulled out of context and backed by thousands of likes and Google's reputation, can effortlessly make people question their assumptions and stray towards misinformation.

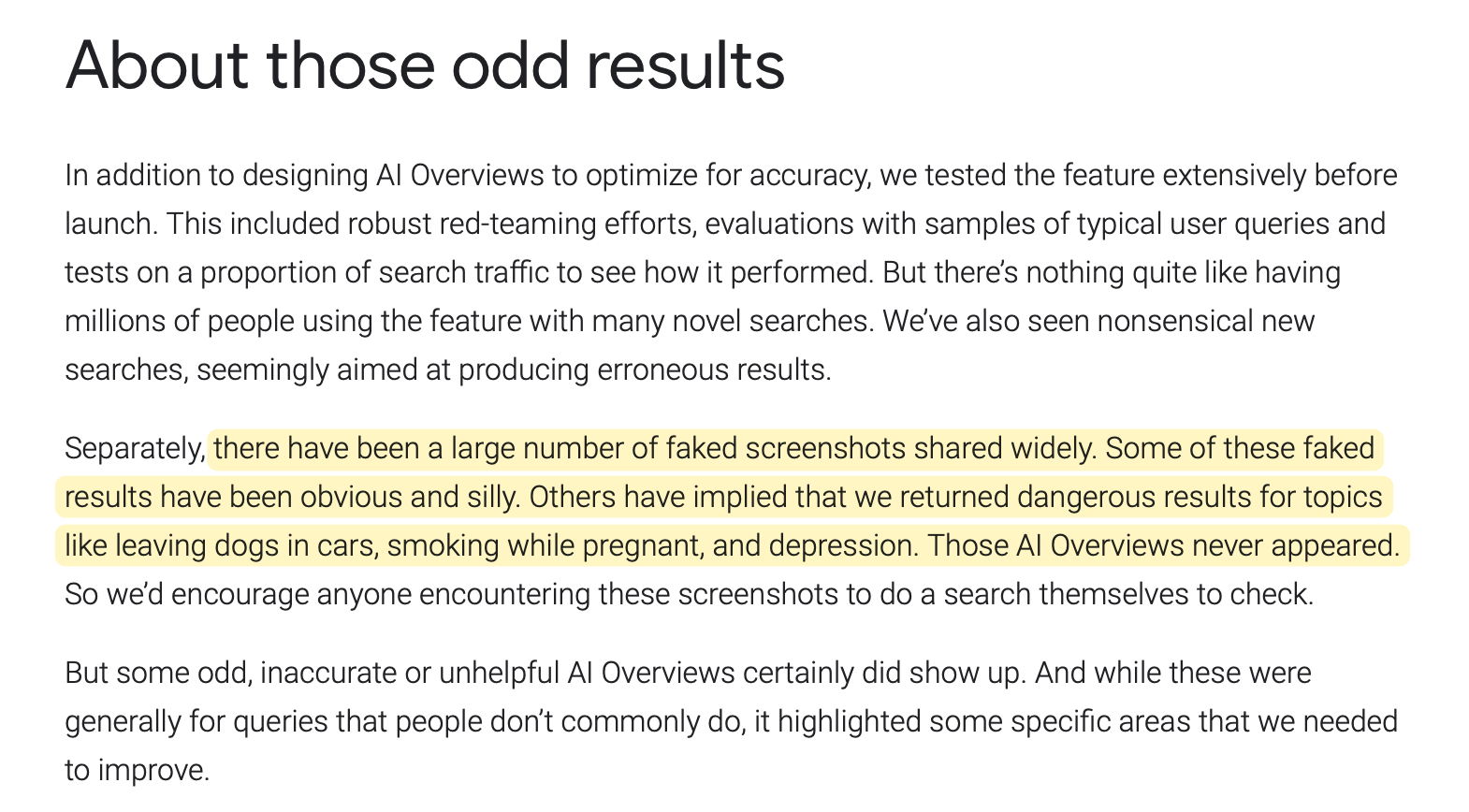

Google later refuted most of these alleged AI Overviews, like the one above, in a public statement, but the problem had spread too far by then:

One mega thread of mostly fake AI Overviews has over 398,000 likes and 34.6 million views across the platform, making thousands of people question Google's competence in creating a safe AI.

But here's the thing:

LLMs from companies such as Google, OpenAI and Anthropic are rigorously safety-tuned, to the extent that it's highly unlikely they will respond with dangerous advice like the one above.

But with our short attention span on social media and the urge to react to something sensational, we often overlook the fundamentals and fall for baseless news, which clouds our judgment.

And social media is not the only place where misinformation surfaces. Chats with AI bots, group chats on WhatsApp, political campaigns and even face-to-face conversations with other people are areas where rumours and misleading facts can breed.

But, what's the big deal?

Let's explore this angle through a personal story involving:

Washing machines and lint

Around eight years ago, I moved to Bangalore with a few school friends for our new jobs in the software industry.

We shared our accommodation to split the rent and did a couple of our chores like laundry and grocery shopping together.

After doing a few rounds of laundry in the shared washing machine in the building, I started noticing an absurd amount of lint on my clothes after every wash.

I hadn't used a washing machine before this, so I had limited knowledge of how one functions.

Confused, I asked my roommate about this recurring problem. It was annoying to scrape off lint from my clothes after each wash.

When asked, my friend confidently told me that this is how it works. He said, “You can't have lint-free washes in a washing machine.”

It felt weird, but given my limited knowledge, I trusted him and made peace with this problem.

Then, around a year later, one of my friends who lived in the same building showed me that you need to clean out the filter after every wash to avoid this problem. It was a shared machine, and people didn't bother to clean the filter after they were done washing their clothes.

For almost a year, I wore clothes sprinkled with someone else's lint. An absolute facepalm moment.

Now:

This was a minor inconvenience, but think of the times you might've been influenced by a YouTube video or a popular news article into buying a health supplement or a product that didn't quite work as advertised.

At best, you lose a few bucks trying a new thing. But it could be far worse if the recommendation affects your health or causes significant financial damage.

In a modern world filled with influencers who are trying to sell us questionable products or rack up followers with half-baked preaching, we need to approach everything we read or see on the internet with a bit of scepticism.

And to do that:

Sagan’s Standard will be your best ally

Sagan's Standard, or ECREE, is an aphorism popularised by the renowned scientist Carl Sagan, which goes like this:

Extraordinary claims require extraordinary evidence

Originally devised for the scientific community, this litmus test helps you question unrealistic advice and take what you come across with a pinch of salt.

The claim, however, doesn't have to be extraordinary for Sagan's Standard to work.

We can hold any claim or fact that makes us even a wee bit sceptical up to Sagan's Standard and demand to see the evidence behind it.

For example:

When I was researching certain Japanese concepts for my book, I used both Claude and Perplexity to help connect unrelated ideas to these topics.

Claude sometimes made up ridiculously wrong answers that left me confused for a few seconds. The answers were so confident that I questioned my understanding of the topics, and whether I'd misunderstood the philosophy all this time.

Upon deeper reflection, the answers seemed far off from everything I'd learned on this topic so far.

A quick comparison between Claude's answers and the ones I received from Perplexity, paired with a good old Google search for the same question, revealed Claude's inaccuracy.

Regardless of whether you deal with hallucinating AI chatbots every day or take advice from friends who confidently spout half-baked advice to look cool, you'll come across extraordinary claims many times in your life.

Sagan's Standard acts as a guideline or reminder to question the basis of what you see on the internet or hear from others without gobbling everything up.

One simple step to embodying this principle is to:

Trust, but verify

The first step is to not accept any claim or advice at face value if it doesn't feel right.

Five minutes of quick research is often enough to address any baseless claims on social media or ones shared during interpersonal conversations.

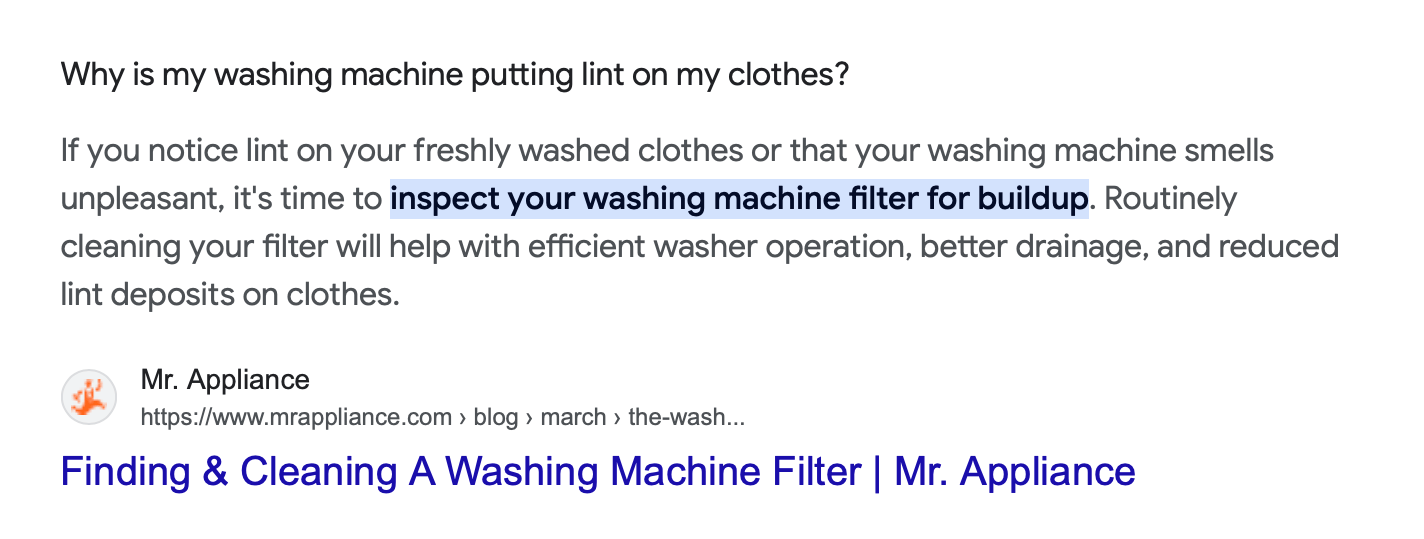

Taking my lint-on-clothes problem as an example, I could've debunked my friend's claim with a 2-minute Google search, like this:

This approach, however, only works as long as the underlying content is solid.

Remember the couple of AI Overviews we discussed earlier where AI summarised false information?

While there isn't much you could do apart from experimenting with the suggested options for these minor daily questions, when it comes to questions on health and psychology, it doesn't hurt to be a bit more cautious.

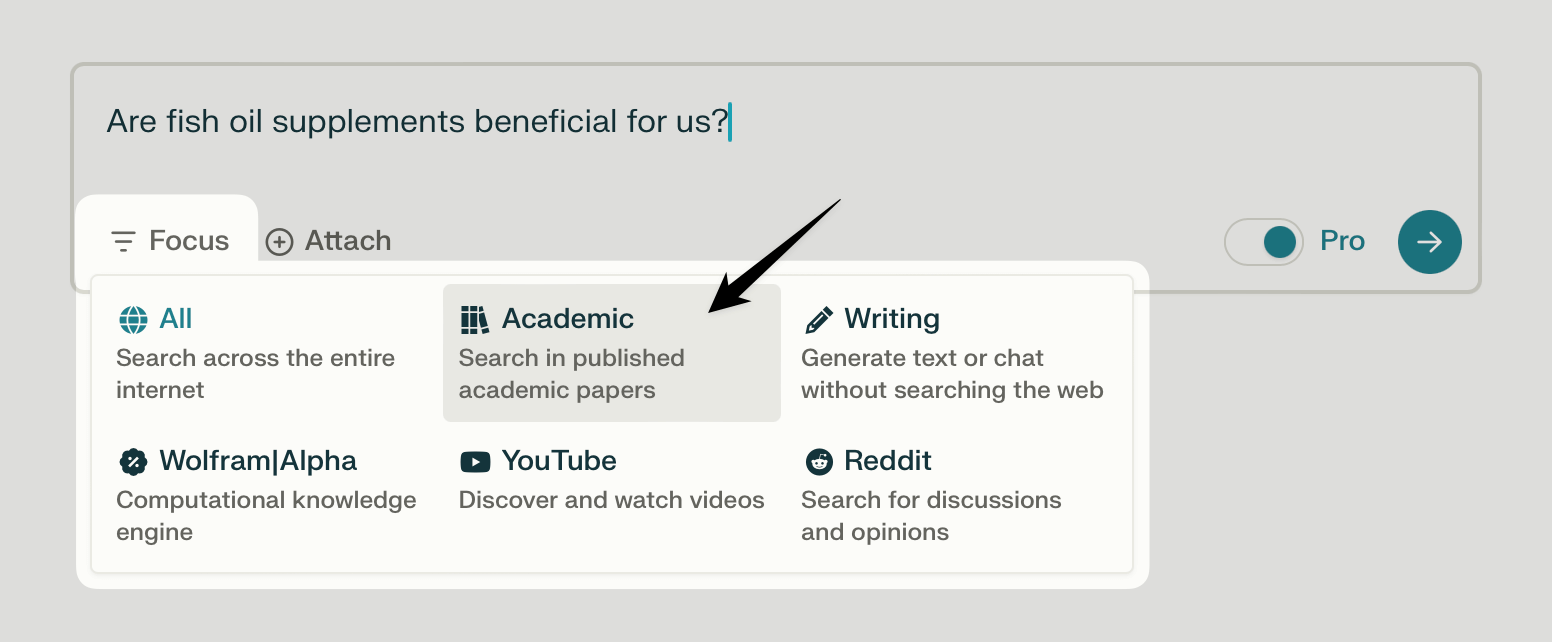

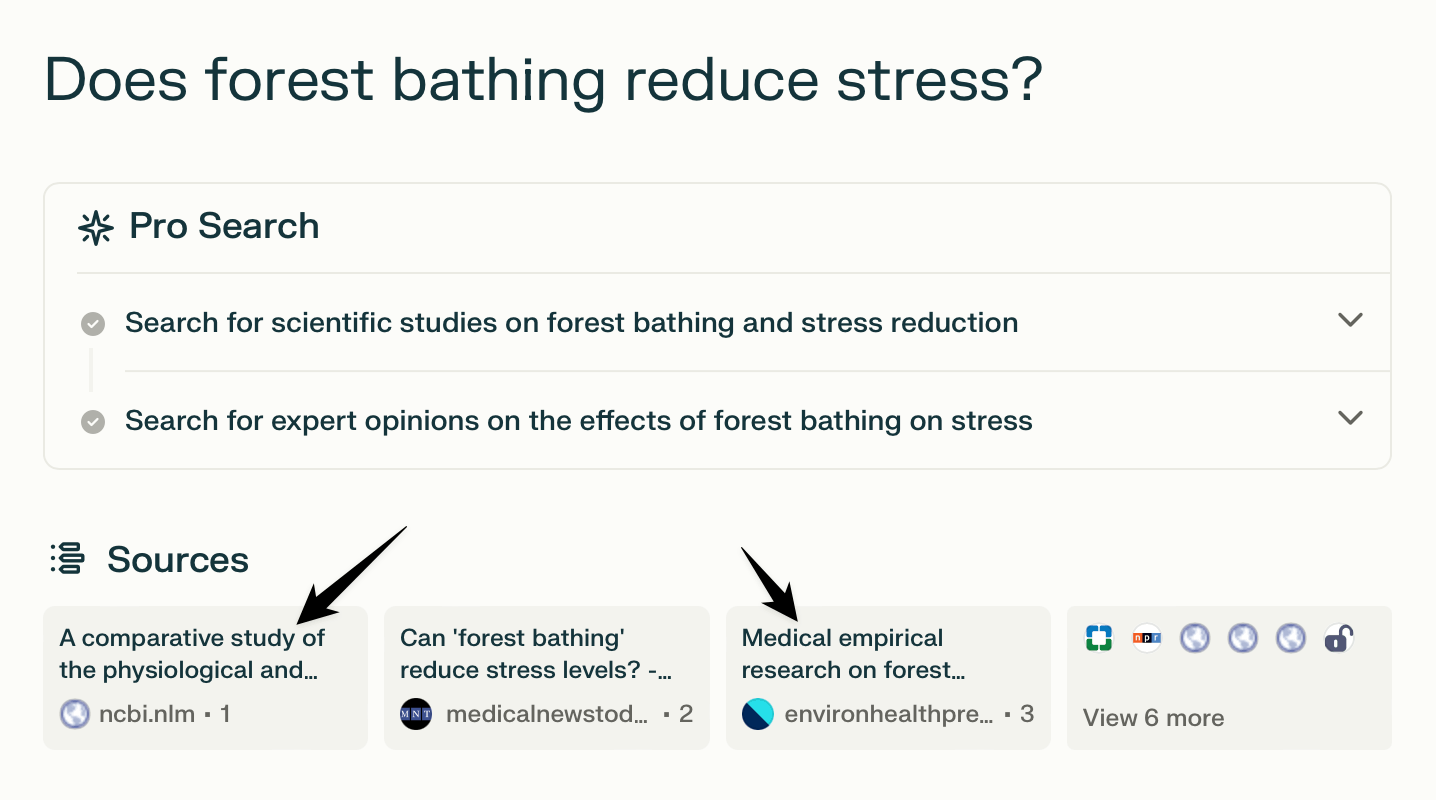

A quick approach to debunking any health myth you find on the internet, or even hear through word of mouth, is to search for it directly on a tool like Perplexity and focus on academic journals for the answer:

Now, academic journals are well-vetted sources of reliable information on various topics, but they can be difficult to comprehend.

Perplexity, or any AI tool that has real-time access to research journals, can bridge that gap by breaking down complex findings into easy-to-understand statements:

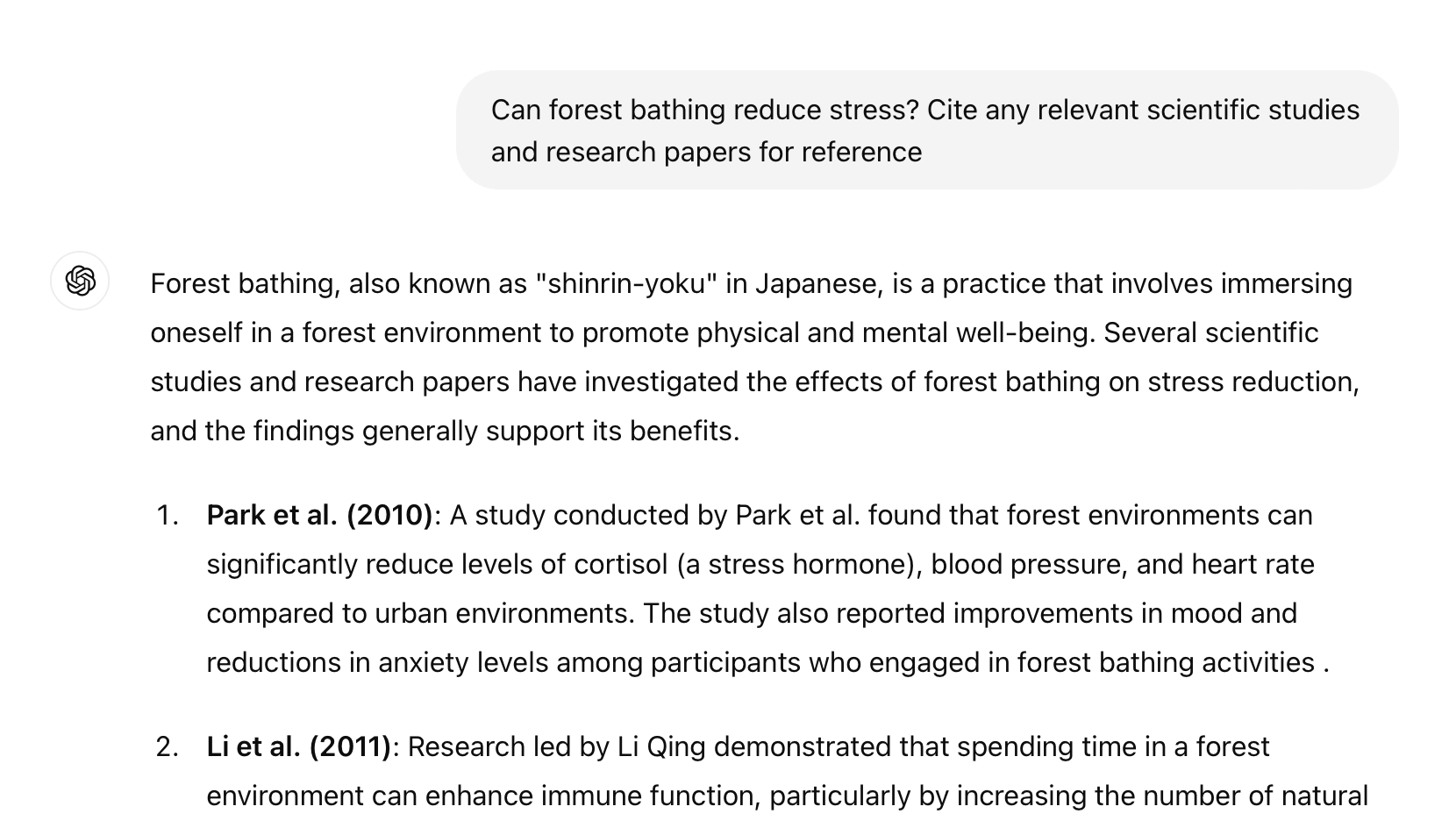

Even ChatGPT can cite its sources if you ask it to:

ChatGPT included a footnotes section below the answer citing the exact research paper for reference:

This is handy because now you can cross-check whether ChatGPT hallucinated, or provided a factually correct answer.

Twitter's Community Notes are another stellar source for debunking rumours and engagement bait.

Whenever I see a tweet that's a bit hard to digest, I check if it has community notes attached to it:

Often it does, and the notes clear up confusion and add important context to the original claim that the author missed, or conveniently left out.

Now:

Cross-referencing everything you see on the internet is tedious and not feasible.

Sagan's Standard plugs in here to help you understand when to put your detective hat on:

The wilder the claim is, the more compelling the evidence should be.

This advice helps me understand the gravity of the claim and its impact on me first, and then choose whether to ignore it, lightly browse for references or dig deeper into the claim.

News like Gmail shutting down is big, directly impacts my email inboxes and is therefore not something I can casually ignore. This is worth cross-checking with reliable sources such as The Verge or The New York Times.

Whereas a celebrity rumour or the latest Donald Trump scandal is, to me, not worth even 15 minutes of digging for sources. Even if they're true, they're unlikely to affect my day-to-day life.

You have to decide what to research further based on how much the news or the information affects you.
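If it helps to see that triage written down, here is a rough sketch of it as a tiny function. The 0 to 5 scales, the thresholds and the scores I give the two examples are all my own assumptions for illustration. They are not part of Sagan's Standard itself.

```python
def triage(wildness: int, impact: int) -> str:
    """Pick an effort level for a claim.

    Both scores are on a hypothetical 0-5 scale:
    wildness = how extraordinary the claim sounds,
    impact   = how much it would affect me if it were true.
    """
    if impact <= 1:
        # True or not, it barely touches my life.
        return "ignore"
    if wildness >= 3 and impact >= 3:
        # Wild claim with real consequences: demand strong evidence.
        return "dig deeper"
    return "browse for references"


# The scores below are illustrative guesses, not measurements.
print(triage(wildness=4, impact=5))  # "Gmail is shutting down" -> dig deeper
print(triage(wildness=3, impact=0))  # celebrity rumour -> ignore
print(triage(wildness=1, impact=3))  # mundane but relevant tip -> browse for references
```

You will never actually score claims with numbers, of course. The point is the order of the questions: how much does this affect me, and only then, how wild is it?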

The methods are straightforward, but remembering the principle of Sagan's Standard helps trigger the much-needed scepticism while dealing with half-baked advice over the internet and in daily conversations.

Remember:

Extraordinary claims require extraordinary evidence.

Thanks for reading. Articles like this one take hours to research, write and publish. If you've found this article helpful, consider supporting my work by buying me some coffee.

In-depth articles, series and guides