Part of The Making AI Work Series

In Part I of the Making AI Work series, we discussed how generative AI works, how to steer it towards workable answers and where not to rely on it.

In Part II, we'll look at generative AI through the lens of working with information every day and figure out ways to:

- Quickly find the information we need

- Interact with information we come across spontaneously

- Easily work with data and documents we've gathered on a theme or project

- Make sense of complicated business and personal metrics without being a math genius

This part of the series aims to show you how to navigate today's information overload and separate the signal from the noise using the various AI tools available.

For simplicity and the sake of our wallets, we'll prefer general-purpose tools such as ChatGPT, Claude, and Perplexity and only use specific tools when necessary.

The concepts and the workflows will rarely be tool-specific, so you can adapt the techniques to the tool of your choice.

Let's start with:

Finding information quickly and sensibly

The simplest way to use generative AI to find information is to ask it everyday questions, much as we would a traditional search engine.

For example, asking “How do I refill a fountain pen?” in ChatGPT gives us this standard guide:

However, the problem with generic questions is that we get generic answers in return.

In Part I, we learned that LLMs answer better when we write questions or instructions more descriptively because it helps them establish patterns and accurately find what we're looking for.

Therefore, rephrasing the original question to include details of your fountain pen helps ChatGPT answer more precisely, like this:

The more descriptive your question is, the more on-point the answer will be.

As Stephen Covey would say:

Begin with the end in mind.

Before writing your question or instruction, imagine how you would like the answer to look and what perspectives or angles you'd like it to cover. Include everything you need from the answer in your original question.

For example, the prompt “Explain the Japanese concept of Kaizen from a personal growth standpoint, and tell me how it can help me in my life as an individual” is much more likely to get you a personalised answer than “What is the Japanese concept Kaizen?”:

Being descriptive gets us more focused, workable answers that are genuinely helpful.

But what about comprehension?

If you've used ChatGPT, Gemini or even Claude, you might've noticed that their answers can sometimes feel robotic, like paragraphs lifted from a college textbook. That makes them hard to digest when you're trying to understand a complex topic or something outside your expertise.

Thankfully, we can adjust how an LLM answers us without tinkering with any technical settings.

Instead of asking a generic question like “What is a global shutter in cameras?” and receiving a standard, textbook answer:

You can instruct ChatGPT, Claude or any other LLM to assume a role or persona while answering your question.

For example, instructing ChatGPT to act as an experienced photographer who can teach students in easy-to-understand terms transforms the previous answer into a more digestible and relatable one:

If you have a well-known teacher in mind, you can ask ChatGPT or Claude to answer as that person instead of describing their qualities. These LLMs have likely been trained on material by and about popular public figures, so they can recognise and imitate their style.

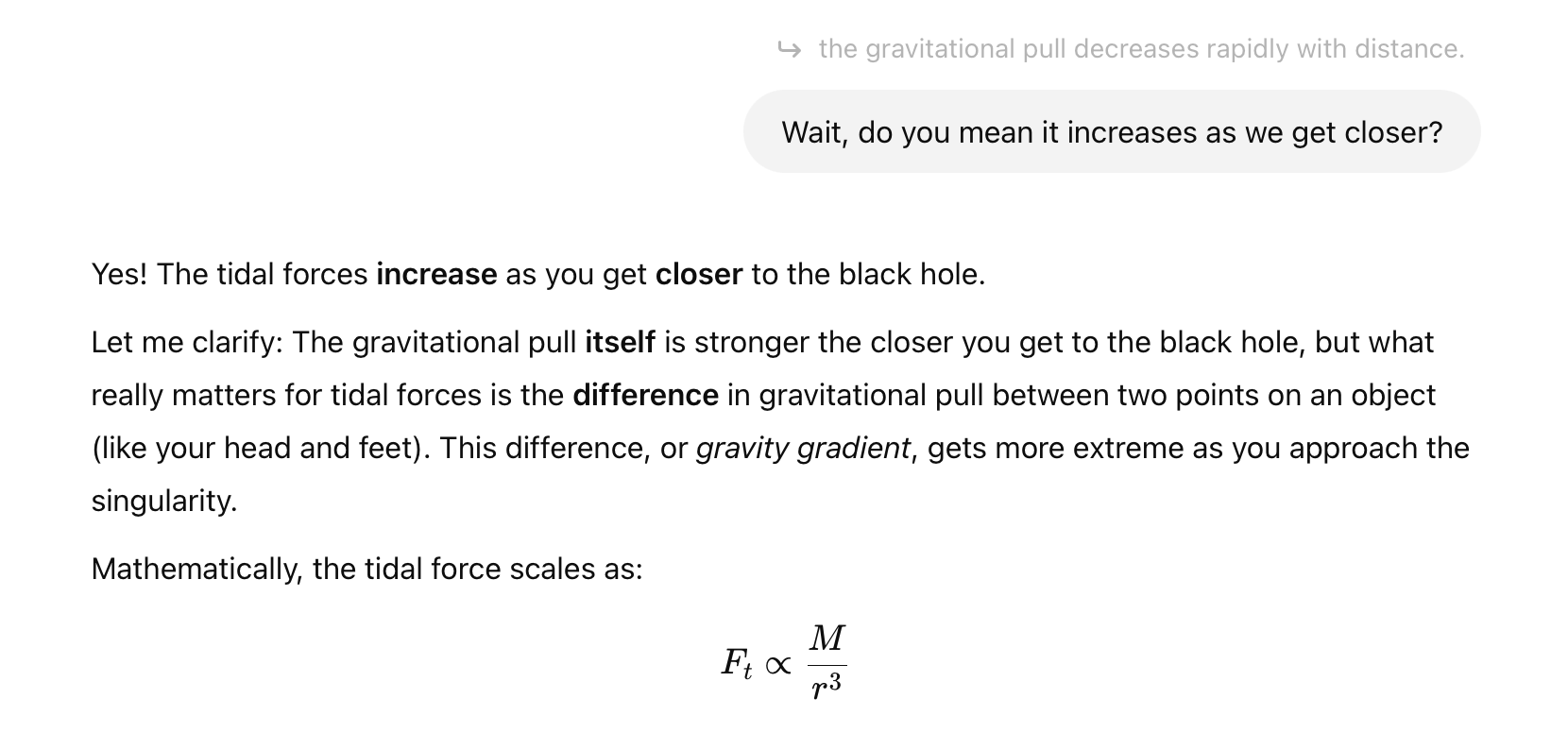

For example, asking ChatGPT to act like Neil deGrasse Tyson or Richard Feynman, both known for explaining complex scientific topics in an accessible way, steers it to explain the concept of singularity in terms we can easily understand:

But personas aren't the only way to get answers in a more digestible format.

For more abstract topics, like the minimax strategy, you can ask ChatGPT or Claude to explain a concept through real-world analogies or stories:

With this directive, ChatGPT explains the minimax strategy in simple, relatable terms, whereas a generic question like “What is minimax strategy?” would have produced a more standard answer:

The generic textbook definition is sometimes enough, but I've often found it easier to understand abstract or complex topics when illustrated with real-world examples.

Now, one of the best qualities of these generative AI apps, and one that traditional search engines lack, is their ability to handle follow-up questions.

This makes them a better interface for research, because we can converse with them as naturally as we would with a knowledgeable person and build a thorough understanding of a topic or subject.

So, when we ask a follow-up question about the minimax strategy, ChatGPT explains it in a broader light, taking into account what we've already discussed in the ongoing thread:

Moreover, we can get answers even if no one has written articles or papers directly addressing our questions.

Therefore, even if there is no article on the Internet about applying the minimax strategy to personal growth, LLMs can synthesise an answer by leveraging their training knowledge and connecting the dots between these two subjects.

This lets us test our hypotheses and clarify our understanding by asking about any doubts or questions that come to mind:

To make follow-ups easier, ChatGPT (for example, when using GPT-4o) shows an annotation popup like this when you select text in an answer:

This is helpful because we can select a portion of the answer we're interested in and click on the annotate button to include this context in our follow-up question:

Anything we ask in our follow-up will take the annotated text as a reference, so ChatGPT narrows its focus and answers more precisely:

With other tools, such as Claude, you can copy and paste text from an answer into your follow-up to quote it as a reference.

Chatting directly with an LLM is enough in most cases, but LLMs fall short when we ask about current events and can even confidently fabricate facts or definitions, a failure known as hallucination.

ChatGPT and Gemini may search the web when they lack knowledge about a topic, but that isn't guaranteed; they might just make things up instead.

Fortunately, there's a way to get around this problem.
